Artificial intelligence — are there any two words that evoke more excitement and fear in today’s business environment? Experts predict artificial intelligence (AI) will either be the most transformative technology in the history of mankind or will lead to our demise. Ultimately, the reality will lie somewhere in the middle of those extremes, but one thing is certain: AI is here to stay, and it will change the way we all work and live.

If we are going to maximize the benefits and minimize the risks of this disruptive technology, we must understand its fundamentals: what it is, where it came from, why it has so much potential and how to mitigate the challenges it presents. This article aims to provide you with the foundation on which to build your AI understanding. It is the first in a series intended to help you cut through the hype and begin harnessing the power of AI.

What Is AI, and How Does It Work?

Artificial intelligence is technology that enables computers and machines to simulate human intelligence and problem-solving capabilities. Despite what you may hear in the media, AI is a wide category that covers many AI solutions, or models, including:

- Traditional AI — Analyzes data to predict trends and automate processes

- Natural language processing — Enables computers to comprehend, generate and manipulate human language

- Audio recognition (also known as voice recognition) — Understands and processes spoken language

- Computer vision — Detects objects and people in images and video

- Machine learning — Uses data and algorithms to imitate the way humans learn, gradually improving accuracy

- Expert systems — Mimic the decision-making and reasoning abilities of a human expert in a specific field

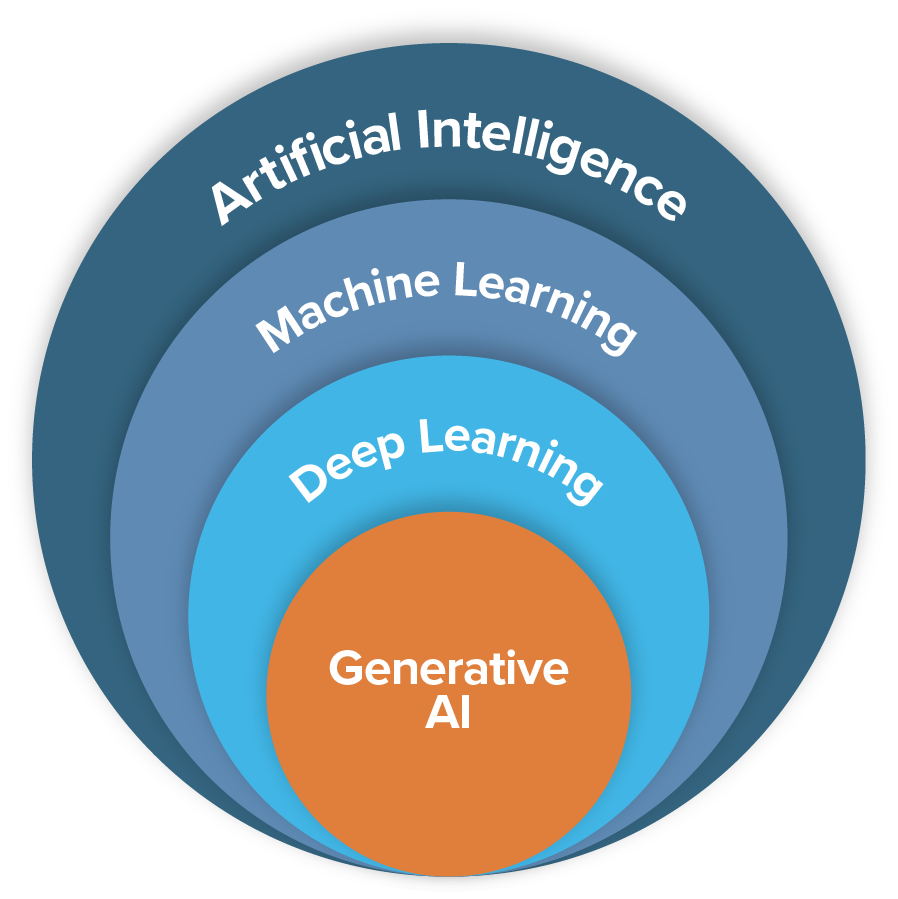

In some cases, AI models are refinements of broader categories, often adding in capabilities from other categories to meet specific needs. Examples include deep learning and, the most hyped AI model to date, generative AI (GenAI).

Deep learning is a subset of machine learning that uses multilayered neural networks, loosely modeled on the human brain, to combine data inputs, weights and biases in order to recognize, classify and describe objects within the data.
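
To make "inputs, weights and bias" concrete, here is a minimal Python sketch of a single artificial neuron, the basic building block that deep learning models stack in large numbers. The function name and the numbers are our own assumptions, chosen only for illustration; real models learn their weights from data rather than having them typed in.

```python
import math

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, squashed into (0, 1) by a sigmoid."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical values: two input signals, one weight per input, one bias.
score = neuron(inputs=[0.5, 0.8], weights=[1.0, -1.0], bias=0.3)
print(round(score, 3))
```

A larger weight makes its input count for more, and the bias shifts the result up or down regardless of the inputs. Deep learning chains many layers of these units together.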

GenAI is a subset of deep learning that pulls from existing information to create synthetic data and creative content (text, images, video, audio, computer code, etc.) that is coherent, contextually appropriate and high-quality. Think: ChatGPT, Google Gemini, etc.

Where Did AI Come From?

While all the recent buzz about GenAI might make it seem like AI is new, the origin of AI dates back to the 1950s, when Alan Turing established the criteria to determine if a machine has intelligence and John McCarthy coined the term “artificial intelligence” at a conference held on the campus of Dartmouth College. Over the next several decades, AI continued to be discussed and advanced in academic and government settings. However, it wasn’t until the 1990s that AI garnered more widespread attention, when “Deep Blue,” a chess-playing expert system, defeated world chess champion Garry Kasparov.

With the internet making large amounts of data widely accessible and advancements in chip technology vastly increasing the processing power of computers, the 2000s and 2010s saw a boom in AI technologies and the first widespread commercial use of the technology in products like iRobot’s Roomba, Apple’s Siri and Amazon’s Alexa. However, with cloud computing and massive investments powering the surge, no decade has seen more activity in the evolution of AI than the 2020s, especially as it relates to the extraordinarily fast evolution of GenAI tools like ChatGPT, Gemini and DALL-E. In 2023 alone, over 10,000 new GenAI models were released for public consumption.

How Does GenAI Work?

GenAI works through a combination of data processing, algorithms and iterative learning. There are four areas to understand.

Data Input and Processing

- GenAI systems start by ingesting large amounts of data relevant to their intended task. This data can include text, numerical data, images or audio. GenAI then processes this data using complex algorithms and mathematical models designed to identify patterns and relationships.

Machine Learning and Pattern Recognition

- At the core of GenAI is machine learning, which allows GenAI to improve its performance over time. GenAI uses statistical techniques to learn from the data, recognizing patterns and making predictions based on observations. This learning process often involves neural networks, which are algorithms structured to mimic the interconnected neurons in the human brain.
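
As a rough illustration of what "learning from the data" means, the Python sketch below starts with a model that knows nothing and nudges two numbers, a weight and a bias, until its predictions match a handful of examples. This technique is gradient descent on a straight-line model, used here only because it is small enough to read; the data, function name and settings are our own assumptions, and real GenAI systems apply the same idea to vastly more parameters.

```python
def train(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b by repeatedly reducing the average squared error."""
    w, b = 0.0, 0.0  # start with no knowledge of the pattern
    n = len(xs)
    for _ in range(epochs):
        # How much the error changes if w or b is nudged slightly.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step in the direction that lowers the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Hypothetical observations that follow the hidden pattern y = 2x + 1.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
w, b = train(xs, ys)
print(round(w, 2), round(b, 2))
```

The model is never told the rule behind the data. It recovers values close to the hidden weight and bias purely by measuring its own errors and adjusting, which is the pattern recognition described above in miniature.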

Decision Making and Output Generation

- Once trained, GenAI systems can analyze new inputs and make decisions or generate outputs based on what they’ve learned. This could involve:

  - Classifying images or recognizing speech

  - Making predictions or recommendations

  - Generating text or other content

  - Controlling autonomous systems
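
To show the step from learned patterns to generated content, here is a deliberately tiny Python sketch. It is not how ChatGPT or Gemini work internally (those rely on very large neural networks), but it follows the same learn-then-generate shape: it records which word tends to follow which in a sample sentence, then produces new text one word at a time. The sample text and function names are our own assumptions.

```python
import random
from collections import defaultdict

def learn_bigrams(text):
    """Record, for every word, the words that were seen directly after it."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, start, length, seed=0):
    """Build up to `length` words by repeatedly picking a word seen after the last one."""
    rng = random.Random(seed)  # fixed seed so repeated runs give the same text
    word = start
    output = [word]
    for _ in range(length - 1):
        options = followers.get(word)
        if not options:  # nothing ever followed this word in the sample
            break
        word = rng.choice(options)
        output.append(word)
    return " ".join(output)

# Hypothetical training text.
corpus = "the cat sat on the mat and the dog sat on the rug"
model = learn_bigrams(corpus)
sentence = generate(model, start="the", length=6)
print(sentence)
```

Every word pair in the output appeared somewhere in the sample text, yet the sentence as a whole may be new. That is the basic sense in which GenAI pulls from existing information to create new content.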

Continuous Improvement

- GenAI systems continue to improve as they are retrained or fine-tuned on more data and on feedback about their results. This iterative process allows GenAI to adapt to new situations and refine its decision-making capabilities over time.

Will GenAI or AI in General Really Change the Way We Work and Live?

AI as a whole has already changed how we work and live in ways we now take for granted. Whether we are using our mobile devices for directions, reviewing recommendations while shopping or receiving suggested responses to messages, AI is part of our everyday experience. As its capabilities continue to expand, AI will continue to drive increased efficiency, improved quality and deeper insights. While it is true these changes will likely make some jobs obsolete and other jobs significantly different, AI will also create new jobs and ultimately supercharge the workforces that successfully leverage it, just as other technology advancements have done before.

What Are the Risks of Using AI in My Business?

While AI shows great promise, that promise comes with some very serious risks that must be addressed to gain full benefit from its transformative power. As with so many innovations, unforeseen risks have already emerged with AI, including those related to data privacy, accuracy, bias, explainability, job displacement and security exploits. Awareness of the risks and how to mitigate them is key.

Stay tuned for our next article in the series, where we’ll explore AI risks and how to minimize them. If you have questions about AI or your AI journey, contact Jeff Chenevey or Kevin Sexton to discuss this topic further.

Cohen & Company is not rendering legal, accounting or other professional advice. Information contained in this post is considered accurate as of the date of publishing. Any action taken based on information in this blog should be taken only after a detailed review of the specific facts, circumstances and current law with your professional advisers.